PITTSBURGH (AP) — The Justice Department has been scrutinizing a controversial artificial intelligence tool used by a Pittsburgh-area child protective services agency following concerns that the tool could lead to discrimination against families with disabilities, The Associated Press has learned.

The interest from federal civil rights attorneys comes after an AP investigation revealed potential bias and transparency issues surrounding the increasing use of algorithms within the troubled child welfare system in the U.S. While some see such opaque tools as a promising way to help overwhelmed social workers predict which children may face harm, others say their reliance on historical data risks automating past inequalities.

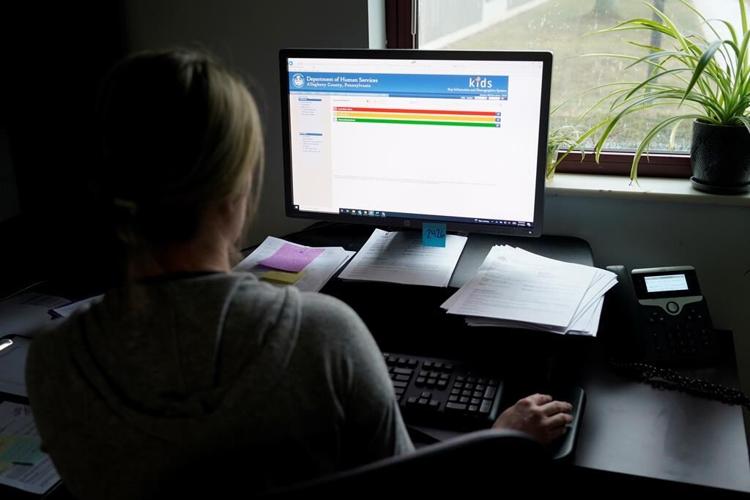

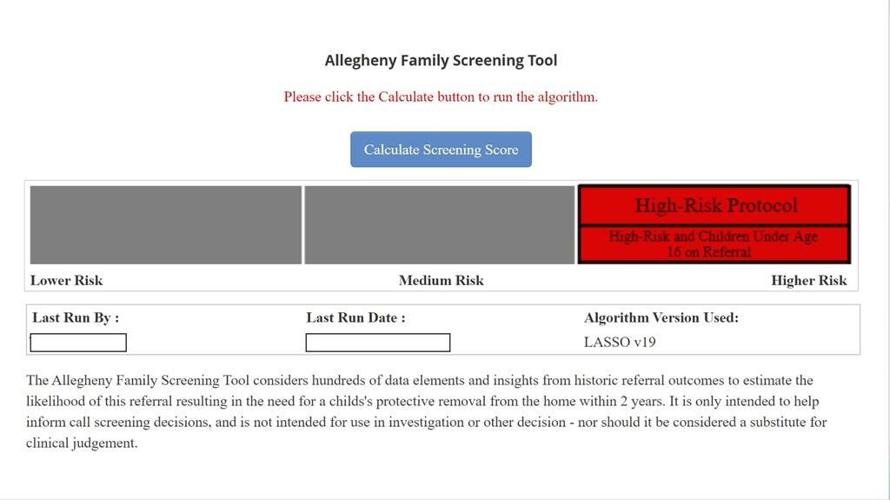

Several civil rights complaints were filed in the fall about the Allegheny Family Screening Tool, which is used to help social workers decide which families to investigate, AP has learned. The pioneering AI program is designed to assess a family’s risk level when they are reported for child welfare concerns in Allegheny County.

Two sources said that attorneys in the Justice Department’s Civil Rights Division cited the AP investigation when urging them to submit formal complaints detailing their concerns about how the algorithm could harden bias against people with disabilities, including families with mental health issues.

A third person told AP that the same group of federal civil rights attorneys also spoke with them in November as part of a broad conversation about how algorithmic tools could potentially exacerbate disparities, including for people with disabilities. That conversation explored the design and construction of Allegheny’s influential algorithm, though the full scope of the Justice Department’s interest is unknown.

All three sources spoke to AP on the condition of anonymity, saying the Justice Department asked them not to discuss the confidential conversations. Two said they also feared professional retaliation.

Wyn Hornbuckle, a Justice Department spokesman, declined to comment.

Algorithms use pools of information to turn data points into predictions, whether that's for online shopping or identifying crime hotspots. Many agencies in the U.S. are considering adopting such tools as part of their work with children and families.

Though there’s been widespread debate over the moral consequences of using artificial intelligence in child protective services, the Justice Department’s interest in the Allegheny algorithm marks a significant turn toward possible legal implications.

Robin Frank, a veteran family law attorney in Pittsburgh and vocal critic of the Allegheny algorithm, said she also filed a complaint with the Justice Department in October on behalf of a client with an intellectual disability who is fighting to get his daughter back from foster care. The AP obtained a copy of the complaint, which raised concerns about how the Allegheny Family Screening Tool assesses a family’s risk.

“I think it’s important for people to be aware of what their rights are and to the extent that we don’t have a lot of information when there seemingly are valid questions about the algorithm, it’s important to have some oversight,” Frank said.

Mark Bertolet, spokesman for the Allegheny County Department of Human Services, said by email that the agency had not heard from the Justice Department and declined interview requests.

“We are not aware of any concerns about the inclusion of these variables from research groups’ past evaluation or community feedback on the (Allegheny Family Screening Tool),” the county said, describing previous studies and outreach regarding the tool.

Child protective services workers can face critiques from all sides. They are assigned blame for both over-surveillance and not giving enough support to the families who land in their view. The system has long been criticized for disproportionately separating Black, poor, disabled and marginalized families and for insufficiently addressing – let alone eradicating – child abuse and deaths.

Supporters see algorithms as a data-driven solution to make the system both more thorough and efficient, saying child welfare officials should use all tools at their disposal to make sure children aren’t maltreated.

Critics worry that delegating some of that critical work to AI tools powered by data collected largely from people who are poor can bake in discrimination against families based on race, income, disabilities or other external characteristics.

The AP’s previous story highlighted data points used by the algorithm that can be interpreted as proxies for race. Now, federal civil rights attorneys have been considering the tool’s potential impacts on people with disabilities.

The Allegheny Family Screening Tool was specifically designed to predict the risk that a child will be placed in foster care in the two years after the family is investigated. The county said its algorithm has used data points tied to disabilities in children, parents and other members of local households because they can help predict the risk that a child will be removed from their home after a maltreatment report. The county added that it has updated its algorithm several times and has sometimes removed disabilities-related data points.

Using a trove of detailed personal data and birth, Medicaid, substance abuse, mental health, jail and probation records, among other government data sets, the Allegheny tool's statistical calculations help social workers decide which families should be investigated for neglect – a nuanced term that can include everything from inadequate housing to poor hygiene, but is a different category from physical or sexual abuse, which is investigated separately in Pennsylvania and is not subject to the algorithm.

The algorithm-generated risk score on its own doesn’t determine what happens in the case. A child welfare investigation can result in vulnerable families receiving more support and services, but it can also lead to the removal of children for foster care and ultimately, the termination of parental rights.

The county has said that algorithms provide a scientific check on call center workers' personal biases. County officials further underscored that hotline workers determine what happens with a family's case and can always override the tool's recommendations. The tool is also only applied to the beginning of a family's potential involvement with the child-welfare process; a different social worker conducts the investigations afterward.

The Americans with Disabilities Act prohibits discrimination on the basis of disability, which can include a wide spectrum of conditions, from diabetes, cancer and hearing loss to intellectual disabilities and mental and behavioral health diagnoses like ADHD, depression and schizophrenia.

The National Council on Disability has noted that a high rate of parents with disabilities receive public benefits including food stamps, Medicaid, and Supplemental Security Income, a Social Security Administration program that provides monthly payments to adults and children with a disability.

Allegheny’s algorithm, in use since 2016, has at times drawn from data related to Supplemental Security Income as well as diagnoses for mental, behavioral and neurodevelopmental disorders, including schizophrenia or mood disorders, AP found.

The county said that when the disabilities data is included, it “is predictive of the outcomes” and “it should come as no surprise that parents with disabilities … may also have a need for additional supports and services.” The county added that there are other risk assessment programs that use data about mental health and other conditions that may affect a parent’s ability to safely care for a child.

Emily Putnam-Hornstein and Rhema Vaithianathan, the two developers of Allegheny’s algorithm and other tools like it, deferred to Allegheny County’s answers about the algorithm’s inner workings. They said in an email that they were unaware of any Justice Department scrutiny relating to the algorithm.

The AP obtained records showing hundreds of specific variables that are used to calculate the risk scores for families who are reported to child protective services, including the public data that powers the Allegheny algorithm and similar tools deployed in child welfare systems elsewhere in the U.S.

The AP’s analysis of Allegheny’s algorithm and those inspired by it in Los Angeles County, California, Douglas County, Colorado, and in Oregon reveals a range of controversial data points that have measured people with low incomes and other disadvantaged demographics, at times evaluating families on race, zip code, disabilities and their use of public welfare benefits.

Since the AP's investigation was published, the White House Office of Science and Technology Policy has emphasized that parents and social workers need more transparency about how government agencies deploy algorithms.

The Justice Department has shown a broad interest in investigating algorithms in recent years, said Christy Lopez, a Georgetown University law professor who previously led some of the Justice Department’s civil rights division litigation and investigations.

In a keynote about a year ago, Assistant Attorney General Kristen Clarke warned that AI technologies had “serious implications for the rights of people with disabilities,” and her division more recently issued guidance to employers saying using AI tools in hiring could violate the Americans with Disabilities Act.

“It appears to me that this is a priority for the division, investigating the extent to which algorithms are perpetuating discriminatory practices,” Lopez said of the Justice Department scrutiny of Allegheny’s tool.

Traci LaLiberte, a University of Minnesota expert on child welfare and disabilities, said the Justice Department's inquiry stood out to her, as federal authorities have largely deferred to local child welfare agencies.

LaLiberte has published research detailing how parents with disabilities are disproportionately affected by the child welfare system. She challenged the idea of using data points related to disabilities in any algorithm because, she said, that assesses characteristics people can’t change, rather than their behavior.

“If it isn’t part of the behavior, then having it in the (algorithm) biases it,” LaLiberte said.

___

Burke reported from San Francisco.

___

This story, supported by the Pulitzer Center on Crisis Reporting, is part of an ongoing Associated Press series, “Tracked,” that investigates the power and consequences of decisions driven by algorithms on people’s everyday lives.

___

Follow Sally Ho and Garance Burke on Twitter at @_sallyho and @garanceburke. Contact AP's global investigative team at Investigative@ap.org.